Agentic AI Models

💬 Overview

Agentic AI models are systems, often powered by large language models (LLMs), that are designed to operate autonomously or semi-autonomously in pursuit of a goal. Rather than simply responding to prompts, these agents take initiative: they plan actions, execute them, observe the outcomes, and iterate based on feedback.

A typical agent breaks a complex task into manageable steps, chooses appropriate tools, and cycles through this process repeatedly, refining its approach along the way. These models maintain their own chain of thought and continue working without requiring new human input at every step.

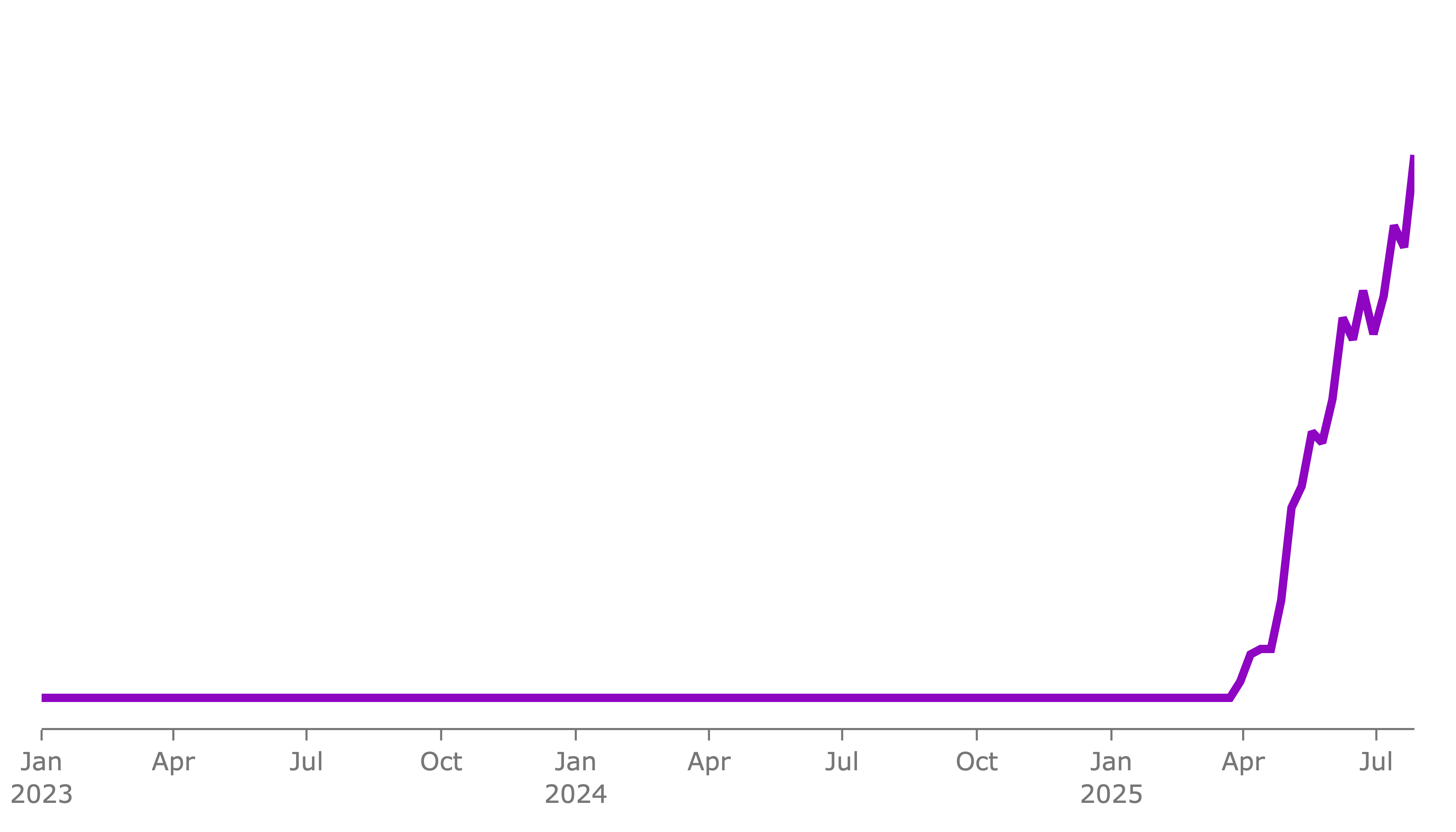

🌍 Worldwide Search Trend: Agentic AI Topic (Jan 2023 - July 2025). Source: Google Trends

Interest in the agentic AI topic has grown rapidly as these systems begin to show real-world potential. The chart above illustrates the rise in global search interest for the topic of “Agentic AI” from early 2023 through mid-2025.

💡 Example Applications

Virtual Research Assistants: Agentic models like AutoGPT can autonomously gather and summarize information. For example, the agent can perform market research by searching the web, analyzing product reviews, and writing a polished summary with minimal human intervention.

Agents in Simulated Environments: Agentic systems can also live inside virtual worlds and learn on their own. For example, Voyager used GPT-4 to explore Minecraft independently: it wrote and refined code, built a skill library, and mastered mining, crafting, and survival through repeated interaction with the environment.

Autonomous Code Manager: Agents such as Devin act like self-directed junior developers. They can navigate codebases, fix bugs, implement features, and even deploy apps. Devin has been shown to autonomously resolve real GitHub issues and manage workflows end-to-end with little supervision.

🤖 How Are Agentic Models Different from Traditional LLMs?

Autonomy: A normal LLM won’t do anything unless prompted each time. It answers your question and stops there. An agentic AI, in contrast, will continue working toward a goal without needing new instructions at every step.

Long-Term Memory: Out of the box, most LLMs are amnesiacs. They only remember what’s in the current chat (and even that is limited by the context length). If the conversation resets, all is forgotten. Agentic systems tackle this by giving the AI long-term memory.

Taking Action: Traditional LLMs live in a text-only world. They produce an answer, but they cannot directly act on external systems. Agentic models are designed to take action.

Planning: When you prompt an LLM with a complex problem, it will try to solve it in one go (perhaps with some chain-of-thought). Agentic AIs make planning explicit.

Iterative Self-Improvement: Because they operate in a loop, agentic systems can learn from their mistakes on the fly. A static LLM, if it produces an incorrect answer, won’t know unless a human corrects it. But an agent can be built to notice failure signals (like an error message) and then adjust.

🧩 Putting It Together: New Capabilities

Agentic models add persistence, initiative, tool-enabled actions, and iterative planning on top of what vanilla LLMs can do. These differences let agents tackle more complex, extended tasks. They also introduce new challenges (like keeping the agent focused, safe, and within bounds). Next, let’s peek under the hood at how these agents function.

🔄 Inside the Agent Loop

Most agentic AI systems share a common control flow. At their core is still an LLM “brain” deciding what to do, but now it’s embedded in a loop of Plan → Act → Observe → Reflect.

Pseudocode for an Agentic AI’s Control Loop

    goal = "Make a dinner reservation at a nice Italian restaurant for 2 people at 6:30PM."

    # Long-term memory of past interactions
    memory = []

    # Main control loop
    while True:
        # Plan: decide the next action
        plan = agent.brain.plan_next_step(goal, memory)

        # Act: perform the planned action
        action = agent.execute(plan)

        # Observe: capture the result from the environment
        result = environment.get_feedback(action)

        # Remember: store this step in memory
        memory.append((plan, action, result))

        # Exit if the goal is achieved
        if agent.check_goal_completed(result):
            logger.info("Goal achieved!")
            break

        # Reflect: adjust strategy based on feedback
        agent.brain.reflect(result, memory)
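
The pseudocode above leaves `agent`, `environment`, and `logger` undefined. As a minimal runnable sketch of the same loop, the version below swaps in hypothetical stand-ins: `ScriptedBrain` replays a fixed list of steps instead of calling an LLM, and `ToyEnvironment` returns canned feedback. The `max_steps` cap is an added assumption so the loop always terminates; it is not part of the original pseudocode.

```python
from dataclasses import dataclass, field


@dataclass
class ScriptedBrain:
    """Hypothetical stand-in for the LLM: replays a fixed list of steps."""
    steps: list
    notes: list = field(default_factory=list)

    def plan_next_step(self, goal, memory):
        # One planned step per completed iteration; repeat the last one if we run out.
        index = min(len(memory), len(self.steps) - 1)
        return self.steps[index]

    def reflect(self, result, memory):
        # A real agent would ask the LLM to critique the result; here we only record it.
        self.notes.append(f"step {len(memory)}: {result}")


class ToyEnvironment:
    """Hypothetical environment: the reservation succeeds once 'book' is attempted."""

    def get_feedback(self, action):
        return "confirmed" if action == "book" else f"done: {action}"


def run_agent(goal, brain, environment, max_steps=10):
    memory = []
    for _ in range(max_steps):  # bounded, unlike `while True`
        plan = brain.plan_next_step(goal, memory)   # Plan
        action = plan                               # Act (trivial here: the plan is the action)
        result = environment.get_feedback(action)   # Observe
        memory.append((plan, action, result))       # Remember
        if result == "confirmed":                   # Goal check
            return True, memory
        brain.reflect(result, memory)               # Reflect
    return False, memory


brain = ScriptedBrain(steps=["search restaurants", "check availability", "book"])
achieved, memory = run_agent("Reserve a table for 2 at 6:30PM", brain, ToyEnvironment())
print(achieved, len(memory))
```

Returning `False` when the step budget runs out gives the caller a clear signal that the agent stalled, which is one simple way to handle the reliability problems discussed later.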

On each iteration, the LLM is fed the current context: the overall goal, recent conversation or results, and a request for the next step. The LLM’s output typically contains both a thought (justification or reasoning) and an action command. For example, it might produce: Thought: “I should search for relevant papers.”; Action: SEARCH["latest research on X"]. The agent framework parses that output and executes the SEARCH action, then feeds the returned results back into the LLM on the next loop as an observation.
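
The parse-and-execute step can be sketched as follows. This assumes the model's output follows the `Thought: ... / Action: NAME["argument"]` shape from the example above; real frameworks each define their own format. `fake_search` and the `TOOLS` table are hypothetical placeholders for real tool integrations.

```python
import re

# Assumed output format, modeled on the example in the text:
#   Thought: <free text>
#   Action: NAME["argument"]
ACTION_PATTERN = re.compile(r'Action:\s*(\w+)\["(.*?)"\]')
THOUGHT_PATTERN = re.compile(r'Thought:\s*(.*)')


def parse_llm_output(text):
    """Return (thought, tool_name, argument); raise ValueError if no action is found."""
    action = ACTION_PATTERN.search(text)
    if action is None:
        raise ValueError("no action found in LLM output")
    thought = THOUGHT_PATTERN.search(text)
    thought_text = thought.group(1).strip() if thought else ""
    return thought_text, action.group(1), action.group(2)


def fake_search(query):
    # Hypothetical tool: a real agent would call a search API here.
    return f"3 results for '{query}'"


TOOLS = {"SEARCH": fake_search}

llm_output = 'Thought: I should search for relevant papers.\nAction: SEARCH["latest research on X"]'
thought, tool_name, argument = parse_llm_output(llm_output)
observation = TOOLS[tool_name](argument)  # fed back to the LLM on the next iteration
print(observation)
```

Raising an error on unparseable output, rather than guessing, lets the controller report the failure back to the model as an observation.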

Crucially, the agent loop also involves updating memory and using feedback. The agent appends important results to its memory (which could simply be keeping past observations in the context window, or using a vector store for longer-term storage). The LLM can then recall earlier facts or adjust plans. If an action failed or returned an error, the next iteration can include that info, prompting the LLM to try a different approach. This iterative cycle continues, giving the agent multiple “shots” at the problem instead of just one.
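
One simple way to realize this, keeping past observations in the context window, is sketched below. The `build_prompt` helper, the `window` parameter, and the convention that failed actions return a string starting with `ERROR` are all assumptions made for illustration.

```python
def build_prompt(goal, memory, window=3):
    """Assemble the next prompt from the goal and the most recent memory entries."""
    lines = [f"Goal: {goal}"]
    for action, result in memory[-window:]:  # keep only the last `window` steps
        lines.append(f"Action: {action}")
        lines.append(f"Observation: {result}")
    last_result = memory[-1][1] if memory else ""
    if last_result.startswith("ERROR"):
        # Surface the failure so the model is nudged toward a different approach.
        lines.append("The last action failed. Try a different approach.")
    lines.append("What is the next action?")
    return "\n".join(lines)


memory = [
    ("SEARCH[italian restaurants]", "Found: Luigi's, Trattoria Roma"),
    ("BOOK[Luigi's, 6:30PM]", "ERROR: no tables available"),
]
prompt = build_prompt("Reserve a table for 2 at 6:30PM", memory)
print(prompt)
```

Truncating to a fixed window keeps the prompt within the context limit; older entries would need a longer-term store to remain retrievable.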

⚙️ Components of an LLM-based Agent

Here are some of the major components that make up an LLM-based agent:

LLM “Reasoning Engine” (Planner): The core language model that plans the next action based on the current goal and memory.

Memory Store: Many frameworks integrate a vector database for long-term memory. The agent can embed text (facts, observations) into a vector and store it, then later perform similarity search to recall relevant information when needed.

Tool/Action Interfaces: These enable the agent to interact with external systems such as search engines, APIs, or code execution environments.

Execution Loop & Controller: The logic that orchestrates the agent, essentially the code that implements the control loop shown above. It manages the flow of planning, acting, observing, and reflecting.

Goal and Task Management: The agent maintains a list of active goals and subtasks, tracking progress and updating as needed.

All these pieces work in concert to create an agentic AI. If you peek into projects like AutoGPT or BabyAGI on GitHub, you’ll find these components reflected in the code: prompt templates for the LLM “brain,” classes for tools and actions, memory buffers or vector store integrations, and a loop driver.
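
To make the memory store component concrete, here is a toy sketch of embed, store, and similarity search. It is not how any particular framework does it: the `embed` function is a bag-of-words stand-in (an assumption for illustration), whereas real systems typically use a learned embedding model and a vector database. `MemoryStore` and its methods are hypothetical names.

```python
import math
from collections import Counter


def embed(text):
    """Toy embedding (an assumption for illustration): lowercase word counts."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[word] * b[word] for word in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class MemoryStore:
    def __init__(self):
        self.entries = []  # list of (text, vector) pairs

    def add(self, text):
        self.entries.append((text, embed(text)))

    def recall(self, query, k=1):
        """Return the k stored texts most similar to the query."""
        query_vec = embed(query)
        ranked = sorted(self.entries, key=lambda e: cosine(query_vec, e[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


store = MemoryStore()
store.add("the user prefers italian food")
store.add("the reservation is for two people")
store.add("the build failed with a syntax error")
print(store.recall("what food does the user like"))
```

The agent would call `recall` with the current goal or question and prepend the returned facts to the prompt before the next planning step.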

📦 Closing Thoughts

Agentic AI models have captured imaginations by hinting at a future where AI isn’t just smart, but goal-driven, proactive, and capable of taking initiative. The idea of simply saying, “Please handle this project,” and having an agent autonomously plan, execute, and adapt is a powerful vision.

But today’s agentic models are still in their early days. Reliability is a key challenge. Agents often get stuck, lose track of tasks, or flounder.

🔑 Key Takeaways

- Action: Agentic models don’t just respond; they take action. They plan and act on the environment to achieve objectives.

- Reliability: Agents can steer toward goals, but today’s systems often falter without robust memory, task tracking, and error handling.

- Safety: With agents that can act in the world, we need deliberate guardrails such as tool whitelists, monitoring, and accountability frameworks to prevent misuse and manage risk.
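
As one illustration of the guardrails mentioned in the Safety point, a tool whitelist with basic monitoring could be sketched like this. The names (`make_guarded_dispatcher`, `ToolNotAllowed`, the example tools) are hypothetical, and a production system would need far more than this.

```python
class ToolNotAllowed(Exception):
    """Raised when the agent requests a tool outside the whitelist."""


def make_guarded_dispatcher(tools, allowed):
    """Wrap a tool table so only whitelisted names run; every request is logged."""
    audit_log = []

    def dispatch(name, argument):
        permitted = name in allowed and name in tools
        audit_log.append((name, argument, permitted))  # monitoring hook
        if not permitted:
            raise ToolNotAllowed(name)
        return tools[name](argument)

    return dispatch, audit_log


# Hypothetical tools for illustration only; neither touches a real system.
tools = {
    "SEARCH": lambda query: f"results for {query}",
    "DELETE_FILE": lambda path: f"deleted {path}",
}
dispatch, audit_log = make_guarded_dispatcher(tools, allowed={"SEARCH"})

print(dispatch("SEARCH", "italian restaurants"))
try:
    dispatch("DELETE_FILE", "/tmp/notes.txt")
except ToolNotAllowed as exc:
    print(f"blocked: {exc}")
```

Logging denied requests as well as permitted ones gives reviewers a record of what the agent attempted, not just what it did.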

As these systems evolve, it will be essential to ensure that their autonomy is matched by reliability, responsibility, and safety.